FlashGrid Cluster for Oracle Failover HA on Google Cloud

Architecture Overview

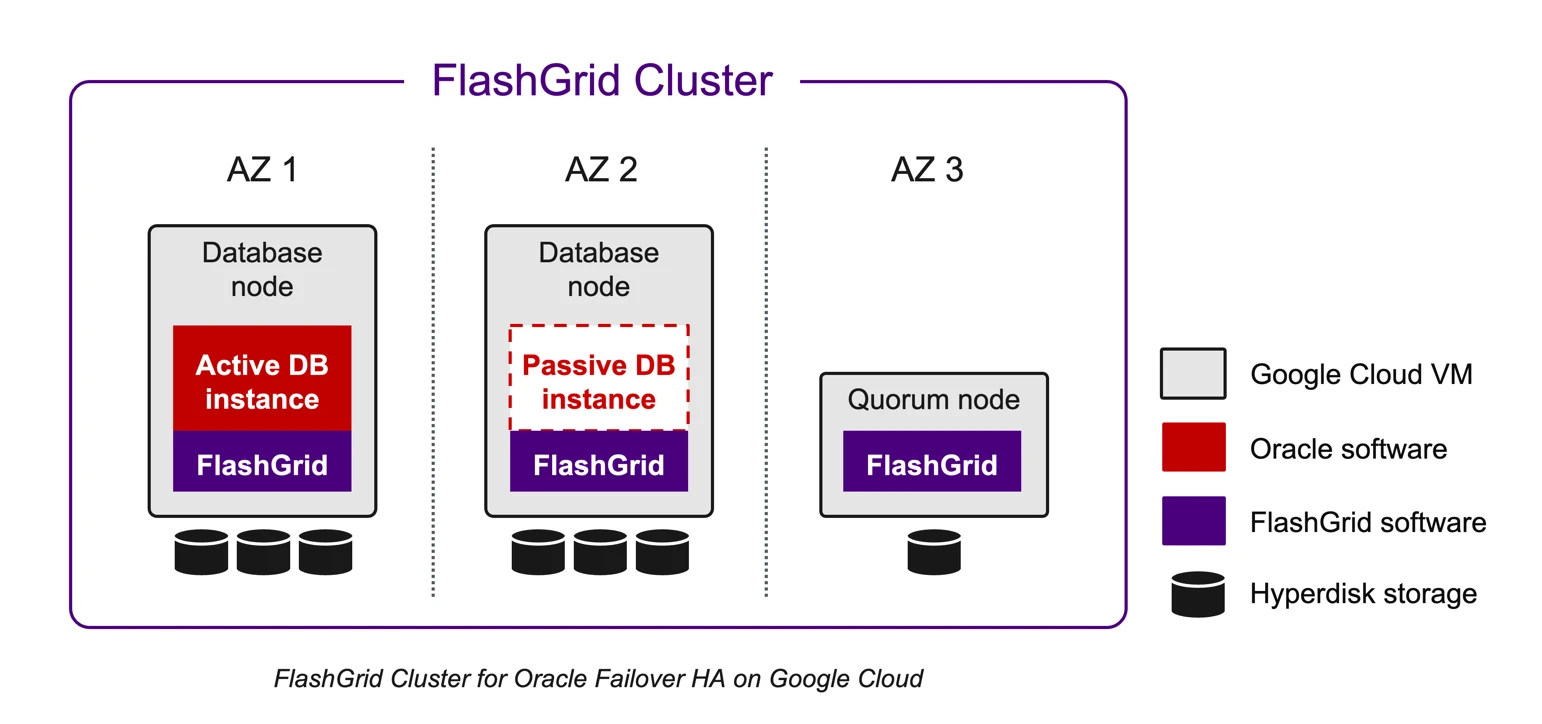

FlashGrid Cluster for Oracle Failover HA makes it possible to achieve an uptime SLA of 99.95% for Oracle Databases running on Google Cloud virtual machines through enhanced reliability of the database servers, fast failure isolation, and rapid failover between availability zones. FlashGrid Cluster is delivered as a fully integrated Infrastructure-as-Code template that can be customized and deployed to your Google Cloud account in a few clicks. It is fully customer-managed and does not restrict any Oracle Database functionality.

Key components of FlashGrid Cluster for Oracle Database Failover HA on Google Cloud include:

FlashGrid Cluster architecture highlights:

Multi-AZ for Maximum Uptime and Fault Tolerance

Google Cloud consists of multiple independent regions. Each region is partitioned into several availability zones. Each availability zone consists of one or more discrete data centers housed in separate facilities, each with redundant power, networking, and connectivity. Availability zones are physically separate, such that even extremely uncommon disasters such as fires or flooding would only affect a single availability zone.

Although availability zones within a region are geographically isolated from each other, they have direct low-latency network connectivity between them. The network latency between availability zones is generally lower than 1 ms. This makes it possible to mirror data synchronously between AZs and achieve zero RPO in case of a failover.

Spreading cluster nodes across multiple availability zones helps to minimize downtime even when an entire data center experiences an outage or a local disaster. FlashGrid recommends using multi-AZ cluster configurations unless there is a specific need to use a single availability zone.

Possible Cluster Configurations

In most cases, a multi-AZ cluster with two database nodes (1+1 redundancy) is recommended for Failover HA (see the diagram above). 2-way data mirroring is used with Normal Redundancy ASM disk groups. An additional small Google Cloud VM (quorum node) is required to host quorum disks. Such a cluster can tolerate the loss of any one node without data loss; if the lost node was running the database instance, the instance fails over to the other database node.
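To illustrate why a third, small quorum node is needed with only two database nodes, the sketch below models the configuration as three voters (two database nodes plus the quorum node) and applies a simple strict-majority rule. This is a simplified illustration of majority voting under that assumption, not Oracle Clusterware's actual voting-file algorithm; the node names are hypothetical.

```python
from itertools import combinations

# Hypothetical node names for a 1+1 cluster with a quorum node.
NODES = ("db-node-1", "db-node-2", "quorum-node")


def has_quorum(surviving: set[str], all_nodes: tuple[str, ...] = NODES) -> bool:
    """Return True if the surviving nodes hold a strict majority of votes."""
    return len(surviving) > len(all_nodes) // 2


def tolerates_failures(k: int) -> bool:
    """Check that every possible combination of k failed nodes leaves a quorum."""
    for failed in combinations(NODES, k):
        if not has_quorum(set(NODES) - set(failed)):
            return False
    return True


if __name__ == "__main__":
    print(tolerates_failures(1))  # True: any single node can be lost
    print(tolerates_failures(2))  # False: one surviving vote is not a majority
```

Without the quorum node, two voters would leave a single survivor after any failure, which is not a strict majority; the third voter is what breaks the tie.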

FlashGrid Cluster can also be deployed in other configurations for specific use cases:

Multiple databases can share one FlashGrid Cluster as separate databases or as pluggable databases in a multitenant container database. For larger databases and for high-performance databases, dedicated clusters are typically recommended for minimizing interference.

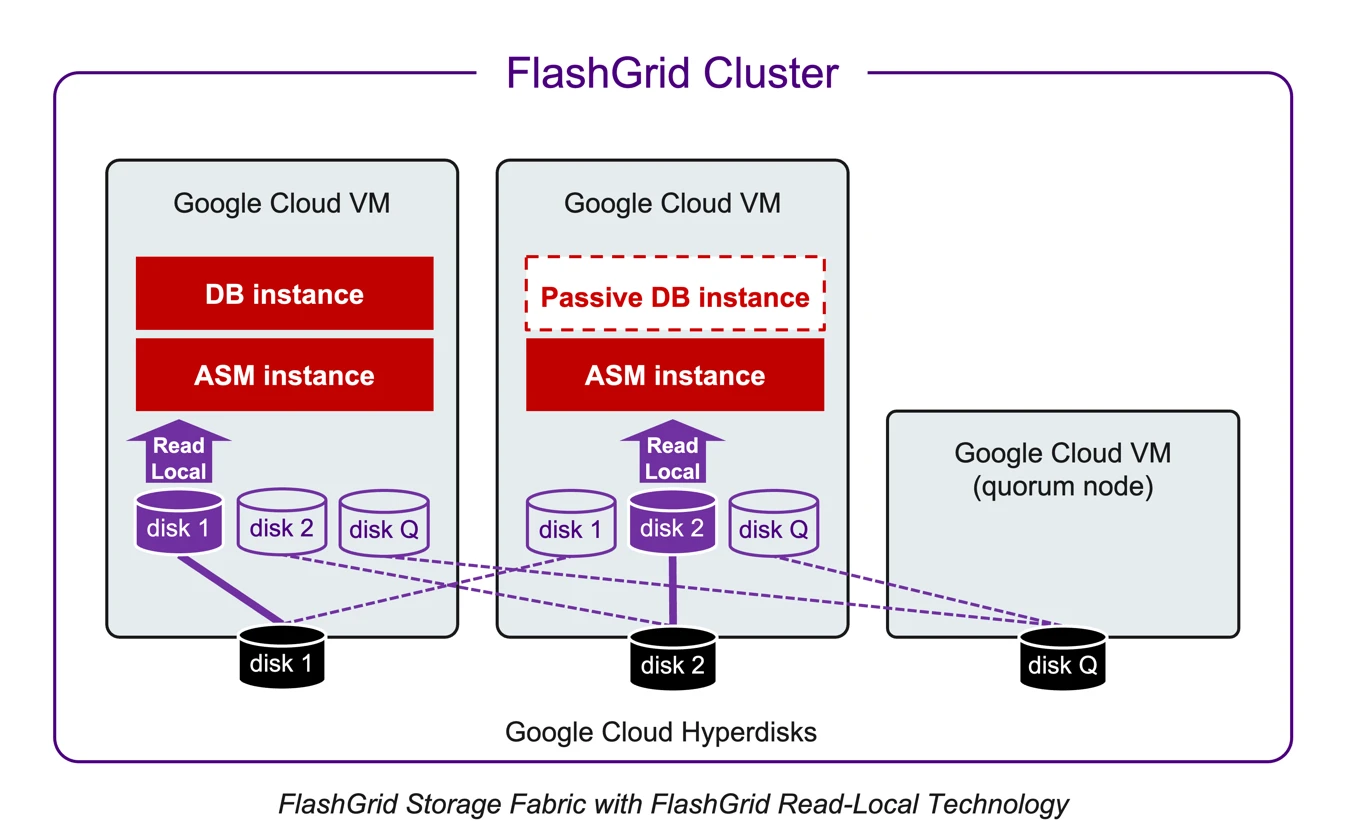

Shared Storage Architecture

FlashGrid Storage Fabric software turns Google Cloud Hyperdisks attached to individual Google Cloud VMs into shared disks accessible from all nodes in the cluster. The sharing is done at the block device level with concurrent access from all nodes.

FlashGrid Read-Local Technology

In a cluster with two or three database nodes, each database node has a full copy of user data stored on Google Cloud Hyperdisks attached to that database node. The FlashGrid Read-Local™ Technology allows serving all read I/O from the locally attached Hyperdisks. Read requests avoid the extra network hop, thus reducing read latency and the amount of network traffic. As a result, more network bandwidth is available for the write I/O traffic.
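The routing rule described above (reads served from the locally attached copy, writes sent to every copy) can be sketched as a toy model. The class and node names are hypothetical, and the sketch only illustrates the routing decision; it does not represent how FlashGrid Read-Local is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class Mirror:
    """One full copy of the data, stored on disks attached to one node."""
    node: str
    blocks: dict[int, bytes] = field(default_factory=dict)


@dataclass
class ReadLocalRouter:
    """Hypothetical per-node I/O router illustrating read-local behavior."""
    local_node: str
    mirrors: list[Mirror]

    def write(self, block: int, data: bytes) -> list[str]:
        """Writes go to every mirror; return the nodes that received the write."""
        for m in self.mirrors:
            m.blocks[block] = data
        return [m.node for m in self.mirrors]

    def read(self, block: int) -> tuple[str, bytes]:
        """Serve reads from the local mirror, avoiding a network hop."""
        for m in self.mirrors:
            if m.node == self.local_node and block in m.blocks:
                return m.node, m.blocks[block]
        # Fall back to a remote mirror only if the local copy is unavailable.
        for m in self.mirrors:
            if block in m.blocks:
                return m.node, m.blocks[block]
        raise KeyError(block)


if __name__ == "__main__":
    mirrors = [Mirror("db-node-1"), Mirror("db-node-2")]
    node1 = ReadLocalRouter("db-node-1", mirrors)
    node2 = ReadLocalRouter("db-node-2", mirrors)
    node1.write(7, b"row data")
    print(node1.read(7))  # ('db-node-1', b'row data')
    print(node2.read(7))  # ('db-node-2', b'row data')
```

Because each node holds a full copy, both routers satisfy the same read from their own disks; only writes have to cross the network.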

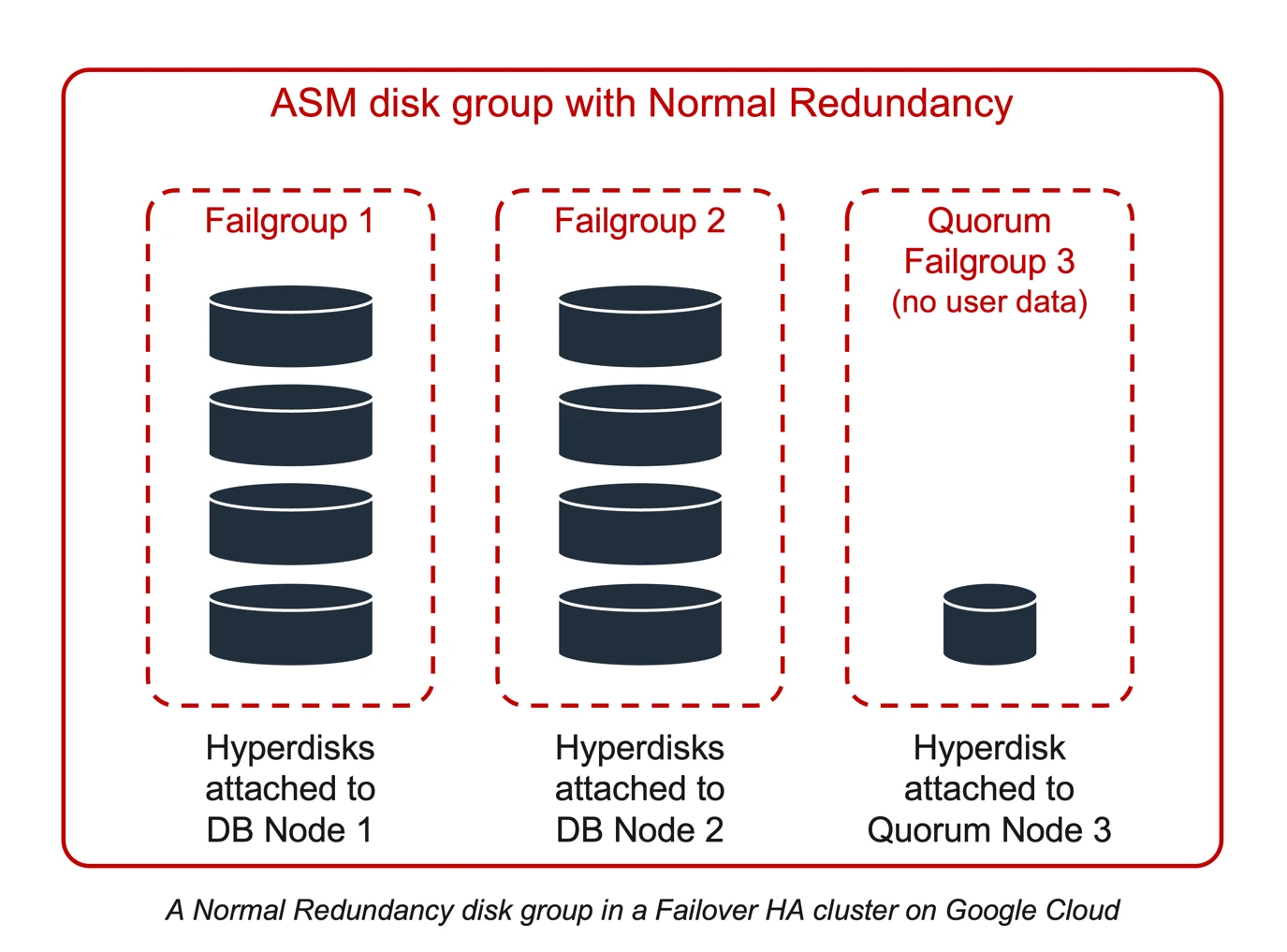

ASM disk group structure and data mirroring

FlashGrid Storage Fabric leverages proven Oracle ASM capabilities for disk group management, data mirroring, and high availability.

In a cluster with two database nodes, all disk groups are configured with Normal Redundancy. In Normal Redundancy mode, each block of data has two mirrored copies. Each ASM disk group is divided into failure groups: one regular failure group per database node plus one quorum failure group. Each disk belongs to the failure group of the node to which its Hyperdisk is attached.

ASM stores mirrored copies of each block in different failure groups. Thus, the cluster can continue running and access data when any one of the disks fails, or any one of the nodes goes down. Since the data mirroring is done synchronously, there is no data loss in the event of an abrupt failure.
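The mirroring rules above can be illustrated with a minimal model: a write is acknowledged only after both copies are stored in two different failure groups, so losing one failure group still leaves a readable copy. This is a simplified sketch under the assumption of exactly two data failure groups (the quorum failure group holds no user data and is omitted); the failure-group names are hypothetical, and real ASM extent placement and degraded-mode behavior are more involved.

```python
class MirroredDiskGroup:
    """Toy model of 2-way synchronous mirroring across failure groups."""

    def __init__(self, failure_groups: list[str]) -> None:
        self.copies: dict[str, dict[int, bytes]] = {fg: {} for fg in failure_groups}
        self.offline: set[str] = set()

    def write(self, block: int, data: bytes, targets: tuple[str, str]) -> bool:
        """Store both copies before acknowledging (synchronous mirroring)."""
        if targets[0] == targets[1]:
            raise ValueError("copies must be placed in different failure groups")
        for fg in targets:
            self.copies[fg][block] = data
        return True  # acknowledged only after both copies are stored

    def fail(self, fg: str) -> None:
        """Simulate the loss of a node and therefore of its failure group."""
        self.offline.add(fg)

    def read(self, block: int) -> bytes:
        """Return any surviving copy of the block."""
        for fg, blocks in self.copies.items():
            if fg not in self.offline and block in blocks:
                return blocks[block]
        raise IOError(f"block {block} has no surviving copy")


if __name__ == "__main__":
    dg = MirroredDiskGroup(["fg-node1", "fg-node2"])
    dg.write(1, b"committed", ("fg-node1", "fg-node2"))
    dg.fail("fg-node1")
    print(dg.read(1))  # b'committed'
```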

High Availability and Uptime Considerations

FlashGrid Storage Fabric and FlashGrid Cloud Area Network™ have a fully distributed architecture with no single point of failure. Additionally, the FlashGrid Cluster architecture leverages HA capabilities built into Oracle Clusterware and ASM.

Node availability

Because Google Cloud VMs can move between physical hosts, a failure of a physical host causes only a short outage for the affected node. The node VM will automatically restart on another physical host. This significantly reduces the risk of double failures.

A single-AZ configuration provides protection against the loss of a database node. It is an efficient way to accommodate planned maintenance (e.g., database or OS patching) without causing database downtime. However, the potential failure of a resource shared by multiple VMs in the same availability zone, such as network, power, or cooling, may cause database downtime.

Placing VMs in different availability zones minimizes the risk of simultaneous node failures.

Data availability with Google Cloud Hyperdisks

A Google Cloud Hyperdisk provides persistent storage that survives a failure of the VM that the disk is attached to. After the failed VM restarts on a new physical host, all its disks are re-attached with no data loss.

Google Cloud Hyperdisks have built-in redundancy that protects data from failures in the underlying physical media. In the FlashGrid Cluster architecture, Oracle ASM performs data mirroring on top of the built-in protection of Google Cloud Hyperdisks. Together, Google Cloud Hyperdisks and ASM’s mirroring provide durable storage with two layers of data protection, which exceed the typical levels of data protection in on-premises deployments.

Zero RPO

Data is mirrored across 2+ nodes in a synchronous manner. In case a node fails, no committed data is lost.

Fast failure detection and failover time

In the event of a failure of an active database node or database instance, the database instance can be immediately started (failed over) on the second node.

The time to restore the database service after an unexpected failure consists of the time to detect the failure plus the time to restart the database instance on the second node.

The time to detect the failure is between 30 and 60 seconds. FlashGrid Cluster uses several techniques to reduce the detection time in various scenarios, including VM failure, disk failure, network failure, out-of-memory conditions, and other OS-level failures. Fast failure detection is an important benefit of the FlashGrid Cluster architecture. Other implementations of Oracle Database on Google Cloud may suffer from long outages because of their limited ability to detect certain types of failure.

The time to start the database instance on the second node depends on the size of the redo log that must be reapplied and the number of uncommitted transactions that must be undone. Essentially, this time depends on the transaction rate at the time of the failure and the sizing of the Google Cloud VM and the Google Cloud Hyperdisks. The recovery time can be reduced by using higher-performance VMs and disks.

With proper sizing of resources, FlashGrid Cluster enables a Recovery Time Objective (RTO) as low as 120 seconds for essentially all types of failures, including more complicated scenarios such as system disk failure or running out of memory.
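Since the restore time is the detection time plus the instance restart time, an RTO target translates directly into a time budget for crash recovery. The sketch below does that arithmetic using the 30 to 60 second detection window and the 120 second target stated in this document; the function name is illustrative only.

```python
def max_restart_time(target_rto_s: float, detection_s: float) -> float:
    """Largest instance restart (crash recovery) time that still meets the RTO."""
    budget = target_rto_s - detection_s
    if budget < 0:
        raise ValueError("detection time alone exceeds the RTO target")
    return budget


if __name__ == "__main__":
    for detection in (30, 60):
        print(f"detection {detection} s -> restart must finish within "
              f"{max_restart_time(120, detection):.0f} s")
```

With worst-case detection of 60 seconds, the database instance must complete crash recovery within about 60 seconds, which is why the VM and disk sizing discussed above matters.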

Uptime SLA

Google Cloud states an uptime SLA of 99.99% for two VMs running in different availability zones. By using the multi-AZ architecture, FlashGrid Cluster enables achieving the 99.95% or greater uptime SLA at the database service level.

The 99.95% uptime SLA allows approximately 4.4 hours of downtime per year (0.05% of 8,760 hours). In the FlashGrid Cluster for Failover HA architecture, database instance startup time during a failover is the biggest potential contributor to database downtime. Therefore, it is important to measure the startup time during crash recovery under typical and peak application loads and to size the VM and disk resources accordingly, both to minimize downtime and to meet the uptime SLA.
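As a rough check of a downtime budget, the allowed yearly downtime is (1 − availability) × 8,760 hours for a 365-day year. The sketch below computes that budget and, under the simplifying assumption that failovers lasting a fixed RTO are the only source of downtime, how many such failovers fit into it. The 120 second RTO is taken from this document; the function names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years are ignored


def downtime_budget_hours(availability: float) -> float:
    """Maximum yearly downtime permitted by an availability target."""
    return (1.0 - availability) * HOURS_PER_YEAR


def failovers_within_budget(availability: float, rto_s: float) -> int:
    """How many failovers of rto_s seconds each fit in the yearly budget."""
    return int(downtime_budget_hours(availability) * 3600 // rto_s)


if __name__ == "__main__":
    print(f"99.95%: {downtime_budget_hours(0.9995):.2f} h/year")         # 4.38 h/year
    print(f"99.99%: {downtime_budget_hours(0.9999) * 60:.1f} min/year")  # 52.6 min/year
    print(failovers_within_budget(0.9995, 120))                          # 131
```

In practice, planned maintenance and longer-than-expected recoveries also consume the budget, so the failover count is an upper bound rather than a target.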

The FlashGrid Health Checker tool helps minimize preventable failures by checking the database and cluster configuration for possible mistakes.

Note: For an uptime SLA of 99.99% or greater and for zero-downtime maintenance, FlashGrid recommends using FlashGrid Cluster for Oracle RAC on Google Cloud that provides active-active HA.

Active Reliability Framework

Ensuring reliable operation of each database node is an integral part of maintaining highly available operation and achieving the target uptime SLA. Also, unexpected failures must be quickly contained to minimize disruption.

FlashGrid Active Reliability Framework provides a layer of failure protection and prevention at the database node level:

Performance Considerations

Multiple availability zones

Using multiple availability zones (AZs) provides substantial availability advantages. However, it does increase network latency because of the distance between the AZs. The network latency between AZs is less than 1 ms in most cases and does not have a critical impact on the performance of many workloads. For example, in the us-east-1 region, we measured latencies below 0.2 ms between all availability zones.

Read-heavy workloads will experience little or no impact because all read traffic is served locally and does not use the network between AZs.
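Why writes, but not reads, feel the inter-AZ latency can be shown with a simple model: assuming both mirrored copies are written in parallel, a write completes when the slower copy acknowledges, and the remote copy pays one network round trip. The model and all input values below are illustrative assumptions, not FlashGrid measurements.

```python
def mirrored_write_latency(local_write_ms: float, rtt_ms: float,
                           remote_write_ms: float) -> float:
    """Assumed model: parallel writes; completion waits for the slower copy."""
    return max(local_write_ms, rtt_ms + remote_write_ms)


def read_local_latency(local_read_ms: float) -> float:
    """With reads served locally, no inter-AZ round trip is added."""
    return local_read_ms


if __name__ == "__main__":
    # Hypothetical inputs: 0.5 ms disk write/read time, 0.2 ms inter-AZ round trip.
    print(f"write: {mirrored_write_latency(0.5, 0.2, 0.5):.2f} ms")
    print(f"read:  {read_local_latency(0.5):.2f} ms")
```

Under these assumptions, the inter-AZ round trip adds directly to write latency while read latency is unchanged, which matches the behavior described above.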

Note that differences in latency between different pairs of AZs provide an opportunity for optimization by choosing which AZs to place database nodes in. In a 2-node cluster, it is optimal to place database nodes in the two AZs with the lowest latency between them. See our knowledge base article for more details.
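Choosing the AZ pair for the two database nodes amounts to picking the pair with the lowest measured latency. The sketch below assumes you have already measured the latency between every pair of zones; the zone names and latency values are made-up placeholders, not measurements.

```python
from itertools import combinations


def best_zone_pair(zones: list[str],
                   latency_ms: dict[tuple[str, str], float]) -> tuple[str, str]:
    """Return the pair of zones with the lowest measured latency."""
    pairs = [tuple(sorted(p)) for p in combinations(zones, 2)]
    missing = [p for p in pairs if p not in latency_ms]
    if missing:
        raise ValueError(f"missing latency measurements for {missing}")
    return min(pairs, key=lambda p: latency_ms[p])


if __name__ == "__main__":
    # Hypothetical zones and latencies, keyed by alphabetically sorted pairs.
    measured = {
        ("zone-a", "zone-b"): 0.30,
        ("zone-a", "zone-c"): 0.15,
        ("zone-b", "zone-c"): 0.22,
    }
    print(best_zone_pair(["zone-a", "zone-b", "zone-c"], measured))  # ('zone-a', 'zone-c')
```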

Storage performance

Storage throughput per node can reach up to 10,000 MBPS and 320,000 IOPS with C4 instance types and Hyperdisk Balanced disks.

For databases that require even higher storage throughput, additional storage nodes may be used to achieve up to 32,000 MBPS storage throughput. This enables the use of extra-large databases with hundreds of terabytes of data.

In most cases, the use of Hyperdisk Balanced storage is recommended. The performance of each Hyperdisk Balanced disk can be configured from 3,000 to 160,000 IOPS and 140 to 2,400 MBPS. By using multiple disks per disk group attached to each database node, the storage throughput can reach the maximum for the VM type and size.
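To estimate how many Hyperdisk Balanced disks per node are needed to reach a given IOPS and throughput target, divide each target by the per-disk maximum and round up. The sketch uses the per-disk limits stated above and assumes I/O is spread evenly across the disks (as ASM striping within a disk group aims to do); actual VM-level limits depend on the VM type and size.

```python
import math

# Per-disk Hyperdisk Balanced maximums, as stated in this document.
DISK_MAX_IOPS = 160_000
DISK_MAX_MBPS = 2_400


def disks_needed(target_iops: int, target_mbps: int) -> int:
    """Minimum number of disks to reach both the IOPS and throughput targets."""
    by_iops = math.ceil(target_iops / DISK_MAX_IOPS)
    by_mbps = math.ceil(target_mbps / DISK_MAX_MBPS)
    return max(by_iops, by_mbps, 1)


if __name__ == "__main__":
    # Per-node maximum quoted for C4 with Hyperdisk Balanced.
    print(disks_needed(320_000, 10_000))  # 5 (throughput, not IOPS, is the constraint)
```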

Performance vs. on-premises solutions

Google Cloud Hyperdisk storage is flash-based and provides an order of magnitude improvement in IOPS and latency compared to traditional spinning hard drive-based storage arrays. With up to 320,000 IOPS and 10,000 MBPS per node, the performance is higher than a typical dedicated all-flash storage array. It is important to note that the storage performance is not shared between multiple clusters. Every cluster has its own dedicated set of Hyperdisks, which ensures stable and predictable performance with no interference from noisy neighbors.

Full Control of the Database and OS

FlashGrid Cluster is fully customer-managed. This allows more interoperability, control, and flexibility than DBaaS offerings. Customer-assigned administrators have full (root) access to the VMs and the operating system. Additional third-party tools, connectors, monitoring, or security software can be installed on the cluster nodes for compliance with corporate or regulatory standards.

Short Learning Curve for Oracle DBAs

Management of the Oracle databases running on FlashGrid Cluster uses standard Oracle tools and services that include Oracle Clusterware, ASM, RMAN, Data Guard, GoldenGate, etc. All database features are available. The patching process is the same as in a typical on-premises environment. FlashGrid Cluster adds tools and services for managing the cluster configuration and maintaining its reliable operation. However, there is no need to learn any new proprietary procedures for performing standard DBA functions.

Optimizing Oracle Database licenses

For customers on a per-CPU Oracle licensing model, optimizing the number of Oracle licenses may be an important part of managing costs. With FlashGrid Cluster, the following options are available to optimize Oracle Database licensing:

Disaster Recovery Strategy

An optimal Disaster Recovery (DR) strategy for Oracle databases will depend on the higher-level DR strategy for the entire application stack.

In a multi-AZ configuration, FlashGrid Cluster provides protection against a catastrophic failure of an entire data center. However, it cannot protect against a region-wide outage or an operator error that destroys cluster resources. The most critical databases may benefit from having one or more replicas as part of the DR strategy. The most common replication tool is (Active) Data Guard, but other tools can be used.

The replica(s) may be placed in a different region and/or in the same region:

A standalone (no clustering) database server may be used as a standby replica. However, using an identical clustered setup for the standby provides the following benefits:

Security

System and data access

FlashGrid Cluster is deployed on Google Cloud VMs in the customer’s Google Cloud account and managed by the customer. The deployment model is similar to running your own Google Cloud VMs and installing FlashGrid software on them. FlashGrid staff has no access to the systems or data.

OS hardening

OS hardening can be applied to the database nodes (as well as to quorum and storage nodes) for security compliance. Customers may use their own hardening scripts or FlashGrid's scripts for hardening aligned with CIS Server Level 1.

Data Encryption

All data on the Hyperdisk storage is encrypted at rest with either Google-managed or customer-managed encryption keys. Oracle Transparent Data Encryption (TDE) can be used as a second data encryption layer if the corresponding Oracle license is available.

TCPS

Customers requiring encrypted connectivity between database clients and database servers can configure TCPS for client connectivity.
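On the client side, a TCPS connection is requested through an Oracle Net connect descriptor with `PROTOCOL=TCPS`. The helper below only builds such a descriptor string; the host name, service name, and the use of port 2484 (a port commonly used for TCPS) are assumptions for illustration. Server and client wallets and certificates must still be configured as described in the Oracle Net Services documentation.

```python
def tcps_descriptor(host: str, service_name: str, port: int = 2484) -> str:
    """Build an Oracle Net connect descriptor that uses TCPS (TLS) transport."""
    return (
        "(DESCRIPTION="
        f"(ADDRESS=(PROTOCOL=TCPS)(HOST={host})(PORT={port}))"
        f"(CONNECT_DATA=(SERVICE_NAME={service_name})))"
    )


if __name__ == "__main__":
    # Hypothetical host and service names.
    print(tcps_descriptor("db.example.internal", "orclpdb"))
```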

Compatibility

Software versions

The following versions of software are supported with FlashGrid Cluster:

Google Cloud VM types

Database node VMs must have 4+ physical CPU cores (8+ vCPUs) and 32+ GB of memory. The following VM types are recommended for database nodes: C4, C3, C3D, M3. In the regions where the recommended VM types are not available yet, N2 or N2D VMs may be used.
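The sizing rules above can be turned into a simple pre-deployment check. In this sketch, the vCPU count and memory are supplied by the caller rather than looked up from Google Cloud, the VM family is parsed from the machine type name prefix, and 8 vCPUs is assumed to correspond to 4 physical cores (2 vCPUs per core). The function name is hypothetical.

```python
RECOMMENDED_FAMILIES = {"c4", "c3", "c3d", "m3"}
FALLBACK_FAMILIES = {"n2", "n2d"}  # only where recommended types are unavailable


def check_db_node(machine_type: str, vcpus: int, memory_gb: float) -> list[str]:
    """Return a list of problems; an empty list means the VM meets the rules."""
    problems = []
    family = machine_type.split("-", 1)[0].lower()
    if family in FALLBACK_FAMILIES:
        problems.append(f"{family}: use only where C4/C3/C3D/M3 are unavailable")
    elif family not in RECOMMENDED_FAMILIES:
        problems.append(f"{family}: not a listed VM family for database nodes")
    if vcpus < 8:  # assumes 2 vCPUs per physical core -> 4+ cores
        problems.append(f"{vcpus} vCPUs: at least 8 vCPUs are required")
    if memory_gb < 32:
        problems.append(f"{memory_gb} GB: at least 32 GB of memory is required")
    return problems


if __name__ == "__main__":
    print(check_db_node("c3-standard-8", vcpus=8, memory_gb=32))  # []
    for issue in check_db_node("n2-standard-4", vcpus=4, memory_gb=16):
        print(issue)
```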

Quorum nodes require fewer resources than database nodes; a single CPU core is sufficient. The c3-standard-2 VM type (1 physical core) is recommended for quorum nodes. Note that no Oracle Database software is installed on the quorum node.

Supported Disk Types

Hyperdisk Balanced disks are recommended for the majority of deployments. With M3 VMs, Hyperdisk Extreme may be used to achieve maximum MBPS throughput.

With older N2 or N2D VM types that do not support Hyperdisk Balanced, Persistent Disks may be used instead. In such cases, pd-balanced is typically recommended; pd-ssd, pd-standard, or pd-extreme may be considered for special cases.

Only zonal disks are used. Data mirroring across availability zones is done at the ASM disk group level.

Database features

FlashGrid Cluster does not restrict the use of any database features. DBAs can enable or disable database features based on their requirements and available licenses.

Database tools

Various database tools from Oracle or third parties can be used with Oracle databases running on FlashGrid Cluster. These include RMAN and RMAN-based backup tools, Data Guard, GoldenGate, Cloud Control (Enterprise Manager), SharePlex, and Dbvisit.

Shared file systems

The following shared file access options can be used with FlashGrid Cluster:

Automated Infrastructure-as-Code deployment

The FlashGrid Launcher tool automates the process of deploying a cluster. It provides a flexible web interface for defining the cluster configuration and generating a Google Cloud Deployment Manager template for it. The following tasks are performed automatically using the Deployment Manager template:

The entire deployment process takes approximately 90 minutes. After the process is complete, the cluster is ready for creating databases. Automatically generated, standardized Infrastructure-as-Code templates help avoid human errors that could lead to costly reliability problems and compromised availability.

Generating templates via REST API

The entire deployment process can be fully automated by using the FlashGrid Launcher REST API, instead of its web GUI, to generate Deployment Manager templates.

Contact Information

For more information, please get in touch with FlashGrid at info@flashgrid.io